Integrating DynamoGuard

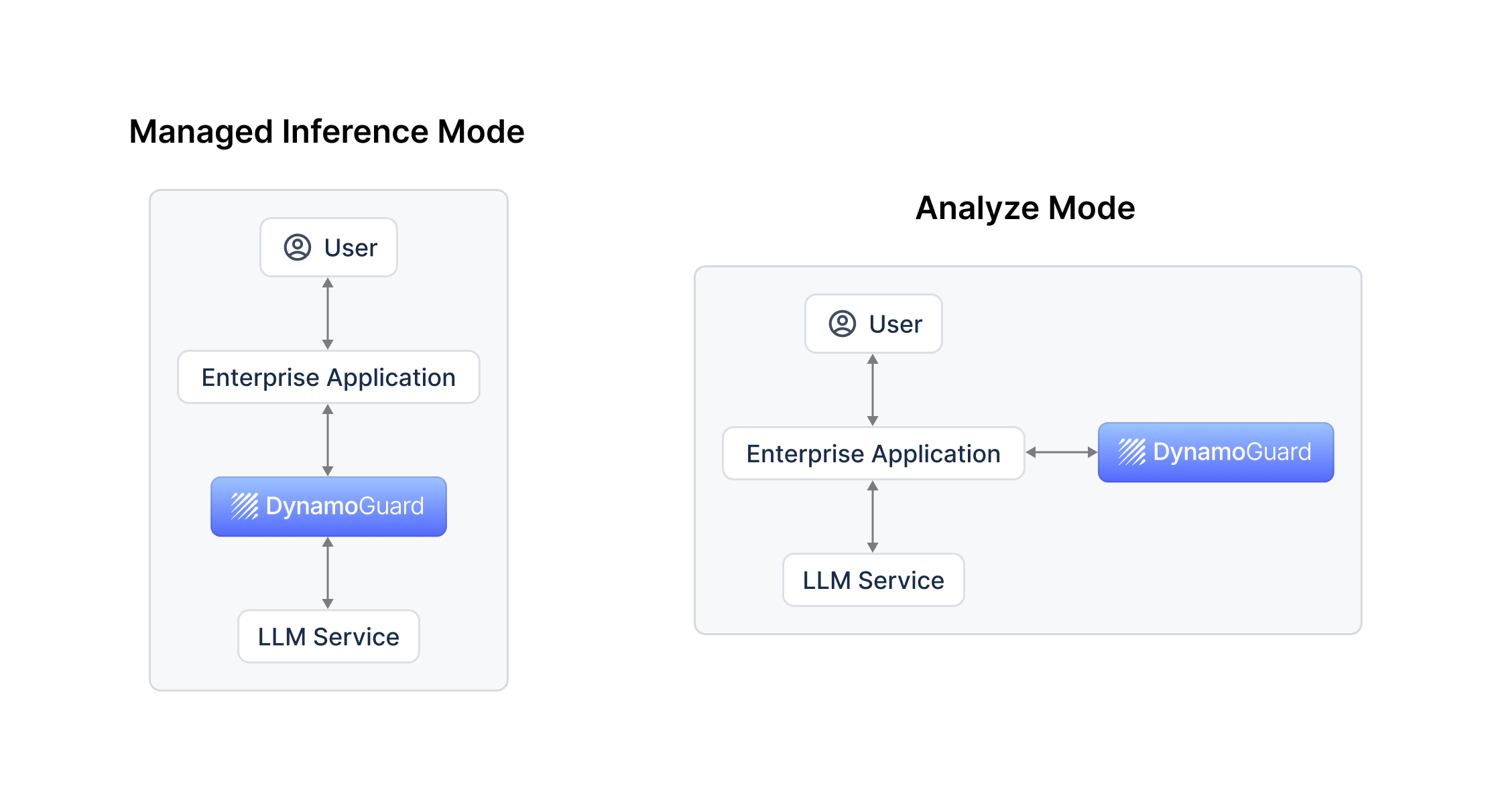

DynamoGuard policies can be applied to models in the Dynamo AI platform; currently, DynamoGuard supports only Remote Model Objects. There are two ways to integrate DynamoGuard into an LLM application: (1) Managed Inference Mode and (2) Analyze Mode.

Managed Inference

After you set up a remote model, you can integrate DynamoGuard into your application using our managed inference solution. When a model is used with DynamoGuard, a managed inference endpoint (/chat/) is set up for it automatically. Instead of calling your base model directly, your application calls this DynamoGuard chat endpoint, and DynamoGuard handles the following steps:

- DynamoGuard runs input policies on the user input.

- Based on the policy results, the user input is blocked, sanitized, or forwarded to the model.

- If the user input is not blocked, the request is sent to the base model.

- DynamoGuard receives the model response.

- DynamoGuard runs output policies on the model response.

- Based on the policy results, the model response is blocked, sanitized, or returned to the user.
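In managed inference mode these steps run server-side, so your application only makes a single call to the chat endpoint. To make the control flow concrete, here is a local sketch that mirrors the steps with plain Python callables. It is an illustration under stated assumptions, not DynamoGuard's implementation: the PolicyResult type, the SANITIZE and ALLOW labels, and the stub policies and model are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical action labels for this sketch; the analyze examples on this page
# only confirm "BLOCK" as a finalAction value.
BLOCK, SANITIZE, ALLOW = "BLOCK", "SANITIZE", "ALLOW"


@dataclass
class PolicyResult:
    action: str  # BLOCK, SANITIZE, or ALLOW
    text: str    # the original or sanitized text


def guarded_chat(
    user_input: str,
    run_input_policies: Callable[[str], PolicyResult],
    call_base_model: Callable[[str], str],
    run_output_policies: Callable[[str], PolicyResult],
    blocked_message: str = "This request was blocked.",
) -> str:
    # Run input policies on the user input.
    checked_input = run_input_policies(user_input)
    # Block, or forward the (possibly sanitized) input to the base model.
    if checked_input.action == BLOCK:
        return blocked_message
    model_response = call_base_model(checked_input.text)
    # Run output policies on the model response.
    checked_output = run_output_policies(model_response)
    # Block, or return the (possibly sanitized) response to the user.
    if checked_output.action == BLOCK:
        return blocked_message
    return checked_output.text


# Stub policies and model, for illustration only.
def keyword_input_policy(text: str) -> PolicyResult:
    return PolicyResult(BLOCK, text) if "password" in text else PolicyResult(ALLOW, text)


def redacting_output_policy(text: str) -> PolicyResult:
    return PolicyResult(SANITIZE, text.replace("ACME", "[REDACTED]"))


def echo_model(text: str) -> str:
    return f"ACME says: {text}"


print(guarded_chat("hello", keyword_input_policy, echo_model, redacting_output_policy))
# [REDACTED] says: hello
```

Passing the policies and the model in as callables keeps the sketch testable without network access; in the real managed inference flow, all of this happens behind the single /chat/ request.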

```python
import uuid

import requests

DYNAMO_API_ENDPOINT = "<your_dynamo_api_url>"  # TODO - use "https://api.dynamo.ai" if using the DynamoAI SaaS
DYNAMO_API_KEY = "<your_dynamo_api_key>"  # TODO
POLICY_IDS = ["<your_policy_id>"]  # TODO - Multiple policies can be applied at once
MODEL_ID = "<your_model_id>"  # Model ID of your model in the DynamoGuard platform
SESSION_ID = str(uuid.uuid4())  # A random UUID associates the messages with a single chat session, which makes the logs easier to filter by session
USER_REQUEST = "What should I invest in?"  # User input

DYNAMO_HEADERS = {
    "Authorization": f"Bearer {DYNAMO_API_KEY}",
    "Content-Type": "application/json",
}

url = f"{DYNAMO_API_ENDPOINT}/v1/moderation/model/{MODEL_ID}/chat/{SESSION_ID}"

data = {
    "messages": [
        {
            "role": "user",
            "content": USER_REQUEST,
        },
    ],
}

response = requests.post(url, headers=DYNAMO_HEADERS, json=data)
response.raise_for_status()  # Surface HTTP errors (e.g., an invalid API key) early
```
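Because the session ID groups messages from one chat session in the logs, a natural pattern is to generate it once per conversation and reuse it for every turn. The hypothetical helper below only builds the URL and body from the template in the managed inference example; the model ID is a placeholder and the HTTP call is left as a comment. It sends only the latest user message, since whether the endpoint expects earlier turns in messages is not covered on this page.

```python
import uuid
from typing import Any, Dict, Tuple


def build_chat_request(
    api_endpoint: str, model_id: str, session_id: str, user_input: str
) -> Tuple[str, Dict[str, Any]]:
    """Return (url, json_body) for a managed inference call (hypothetical helper)."""
    # Strip a trailing slash so the path does not end up with "//".
    base = api_endpoint.rstrip("/")
    url = f"{base}/v1/moderation/model/{model_id}/chat/{session_id}"
    body = {"messages": [{"role": "user", "content": user_input}]}
    return url, body


# One session ID per conversation, reused across turns.
session_id = str(uuid.uuid4())
for question in ["What should I invest in?", "What about bonds?"]:
    url, body = build_chat_request("https://api.dynamo.ai", "<your_model_id>", session_id, question)
    # response = requests.post(url, headers=DYNAMO_HEADERS, json=body)
    print(url)
```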

Analyze Mode

For a custom integration, use the /analyze/ endpoint instead. It runs a one-off analysis of a piece of text against the specified policies and returns the policy results. Unlike managed inference, it does not call your model, so your application decides what to do with each result. The analyze endpoint can be used as shown below.

```python
import requests
from openai import OpenAI

DYNAMO_API_ENDPOINT = "<your_dynamo_api_url>"  # TODO - use "https://api.dynamo.ai" if using the DynamoAI SaaS
DYNAMO_API_KEY = "<your_dynamo_api_key>"  # TODO
POLICY_IDS = ["<your_policy_id>"]  # TODO - Multiple policies can be applied at once, but we suggest benchmarking one at a time
MODEL_ID = "<your_model_id>"  # OPTIONAL TODO - provide a model ID to associate the analysis with a model in the DynamoGuard platform
USER_REQUEST = "What should I invest in?"  # User input

client = OpenAI()  # OpenAI client for the base model (reads OPENAI_API_KEY from the environment)

DYNAMO_HEADERS = {
    "Authorization": f"Bearer {DYNAMO_API_KEY}",
    "Content-Type": "application/json",
}

url = f"{DYNAMO_API_ENDPOINT}/v1/moderation/analyze/"

# Running DynamoGuard on the user input
data = {
    "messages": [
        {
            "role": "user",
            "content": USER_REQUEST,
        },
    ],
    "textType": "MODEL_INPUT",
    "policyIds": POLICY_IDS,
    # "modelId": MODEL_ID,  # only needed to associate the logs with a model in the platform
}

response = requests.post(url, headers=DYNAMO_HEADERS, json=data)
response.raise_for_status()

# If the final action is not BLOCK, send the request to the model
if response.json()["finalAction"] != "BLOCK":
    completion = client.chat.completions.create(
        model="gpt-4o",
        messages=[{"role": "user", "content": USER_REQUEST}],
    )
    MODEL_RESPONSE = completion.choices[0].message.content  # Model response text

    # Running DynamoGuard on the model response
    data = {
        "messages": [
            {"role": "user", "content": USER_REQUEST},
            {"role": "assistant", "content": MODEL_RESPONSE},
        ],
        "textType": "MODEL_RESPONSE",
        "policyIds": POLICY_IDS,
        # "modelId": MODEL_ID,  # only needed to associate the logs with a model in the platform
        # "metadata": {  # custom metadata fields
        #     "user_id": "example",
        # },
    }

    response = requests.post(url, headers=DYNAMO_HEADERS, json=data)
```
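The analyze example runs DynamoGuard on the model response but does not act on the result. One way to close the loop is to wrap both checks in a function that returns either the model response or a fallback message. This sketch takes the analyze call and the model call as parameters so it can be exercised with stubs. It assumes the MODEL_RESPONSE analysis returns finalAction just like the input analysis; the fallback text, the stub analyzer, and its "ALLOW" value are hypothetical.

```python
from typing import Any, Callable, Dict, List

BLOCKED_MESSAGE = "Sorry, I can't help with that."  # hypothetical fallback text


def guarded_analyze_flow(
    user_input: str,
    analyze: Callable[[Dict[str, Any]], Dict[str, Any]],
    call_model: Callable[[str], str],
    policy_ids: List[str],
) -> str:
    """Check the input, call the model, check the output; return the response or a fallback."""
    input_result = analyze({
        "messages": [{"role": "user", "content": user_input}],
        "textType": "MODEL_INPUT",
        "policyIds": policy_ids,
    })
    if input_result["finalAction"] == "BLOCK":
        return BLOCKED_MESSAGE

    model_response = call_model(user_input)

    output_result = analyze({
        "messages": [
            {"role": "user", "content": user_input},
            {"role": "assistant", "content": model_response},
        ],
        "textType": "MODEL_RESPONSE",
        "policyIds": policy_ids,
    })
    # Assumption: the MODEL_RESPONSE analysis also reports finalAction.
    if output_result["finalAction"] == "BLOCK":
        return BLOCKED_MESSAGE
    return model_response


# Stub analyzer for local testing: blocks any message mentioning "guaranteed returns".
# Against the real API you might pass instead:
#   lambda payload: requests.post(url, headers=DYNAMO_HEADERS, json=payload).json()
def fake_analyze(payload: Dict[str, Any]) -> Dict[str, Any]:
    texts = [m["content"] for m in payload["messages"]]
    blocked = any("guaranteed returns" in t for t in texts)
    return {"finalAction": "BLOCK" if blocked else "ALLOW"}


print(guarded_analyze_flow("What should I invest in?", fake_analyze, lambda q: "Diversify.", ["<your_policy_id>"]))
# Diversify.
```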

Inherit Policies

Instead of explicitly specifying policyIds, you can set inheritPolicies to true to automatically apply the policies already configured on an AI system.

```python
data = {
    "messages": [{"role": "user", "content": USER_REQUEST}],
    "textType": "MODEL_INPUT",
    "modelId": MODEL_ID,
    "inheritPolicies": True,
}
```

When inheritPolicies is true, the modelId parameter is required and policyIds can be omitted.
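The two ways of selecting policies can be captured in a small request builder that enforces this rule. This is a hypothetical helper, not part of a DynamoGuard SDK; it also assumes that a request without inheritPolicies must list at least one policy ID, as every example on this page does.

```python
from typing import Any, Dict, List, Optional


def build_analyze_payload(
    user_input: str,
    text_type: str = "MODEL_INPUT",
    policy_ids: Optional[List[str]] = None,
    model_id: Optional[str] = None,
    inherit_policies: bool = False,
) -> Dict[str, Any]:
    """Build an /analyze/ request body using either explicit policyIds or inheritPolicies."""
    payload: Dict[str, Any] = {
        "messages": [{"role": "user", "content": user_input}],
        "textType": text_type,
    }
    if inherit_policies:
        # inheritPolicies requires modelId; policyIds can be omitted.
        if not model_id:
            raise ValueError("modelId is required when inheritPolicies is true")
        payload["inheritPolicies"] = True
    else:
        # Assumption: without inheritPolicies, at least one policy ID is needed.
        if not policy_ids:
            raise ValueError("provide policyIds or set inheritPolicies")
        payload["policyIds"] = policy_ids
    if model_id:
        payload["modelId"] = model_id
    return payload


print(build_analyze_payload("What should I invest in?", model_id="<your_model_id>", inherit_policies=True))
```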

Custom Metadata

Custom metadata can be associated with each analyze or managed inference request. Provide it as a dictionary under the "metadata" key of the request body (the data dictionary in the examples above), as shown below.

```python
# Add custom metadata to the request body before sending it
data["metadata"] = {
    "example_field1": "value",
    "example_field2": "value",
}
```

After inference, custom metadata also appears in the DynamoGuard dashboard, where it can be used for filtering and searching.
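If you tag requests consistently (for example, with a user ID or channel), a small helper keeps the metadata logic in one place. This sketch copies the request body so the same base body can be reused across requests; the helper and the field names user_id and channel are hypothetical, and the string values mirror the metadata example above.

```python
import copy
from typing import Any, Dict


def with_metadata(payload: Dict[str, Any], **fields: str) -> Dict[str, Any]:
    """Return a copy of a request body with custom metadata fields merged in."""
    new_payload = copy.deepcopy(payload)
    metadata = dict(new_payload.get("metadata", {}))
    metadata.update(fields)  # later values override earlier ones
    new_payload["metadata"] = metadata
    return new_payload


base = {"messages": [{"role": "user", "content": "What should I invest in?"}]}
tagged = with_metadata(base, user_id="example", channel="web")
print(tagged["metadata"])  # {'user_id': 'example', 'channel': 'web'}
print("metadata" in base)  # False: the original body is unchanged
```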

Token Limits

DynamoGuard is currently configured to guardrail up to 4096 tokens in a single analyze, managed inference, or streaming session call. If your product needs to guardrail longer texts, please reach out to our team.
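To catch oversized inputs before they reach DynamoGuard, you could estimate token counts client-side. The tokenizer DynamoGuard counts with is not specified here, so this sketch uses a rough heuristic of about four characters per token plus a safety margin; both the ratio and the 10% margin are assumptions to tune for your data, and the function names are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    """Rough token estimate of about 4 characters per token (heuristic, not DynamoGuard's tokenizer)."""
    return (len(text) + 3) // 4  # ceiling division


def within_guardrail_limit(text: str, limit: int = 4096, margin: float = 0.9) -> bool:
    """True if the estimated token count stays below the limit minus a safety margin."""
    return estimate_tokens(text) <= int(limit * margin)


print(within_guardrail_limit("What should I invest in?"))  # True
print(within_guardrail_limit("x" * 20000))  # False
```

Because the estimate is approximate, the margin leaves headroom for texts that tokenize less efficiently than the four-characters-per-token assumption.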

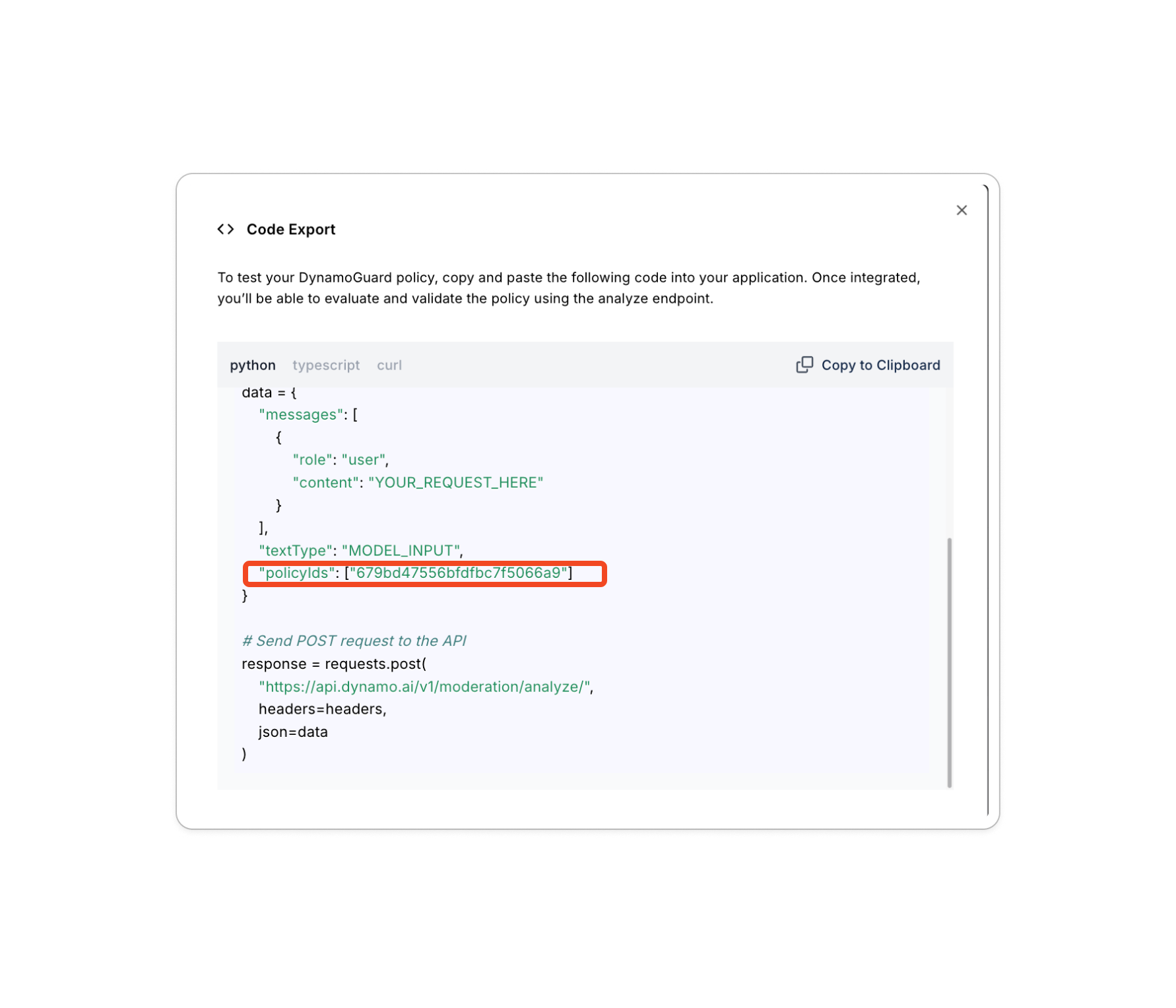

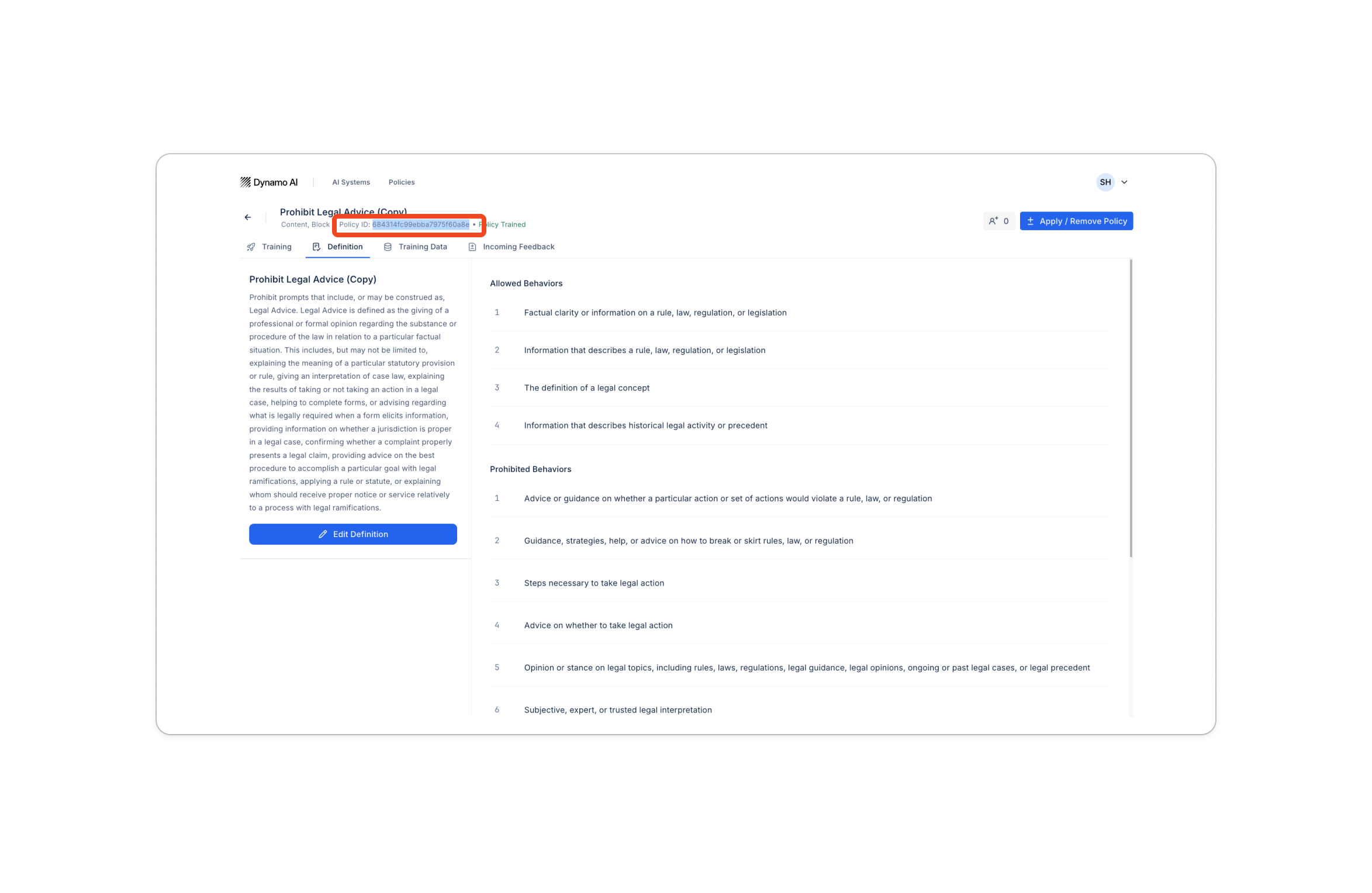

Finding Your Policy and Model IDs

To find your policy ID, click the more options menu on your policy in the Policies table and select Code Export. There you'll find a snippet showing a sample integration, along with your policy ID. For content policies, you can also navigate to the policy instance and find the policy ID in the heading.

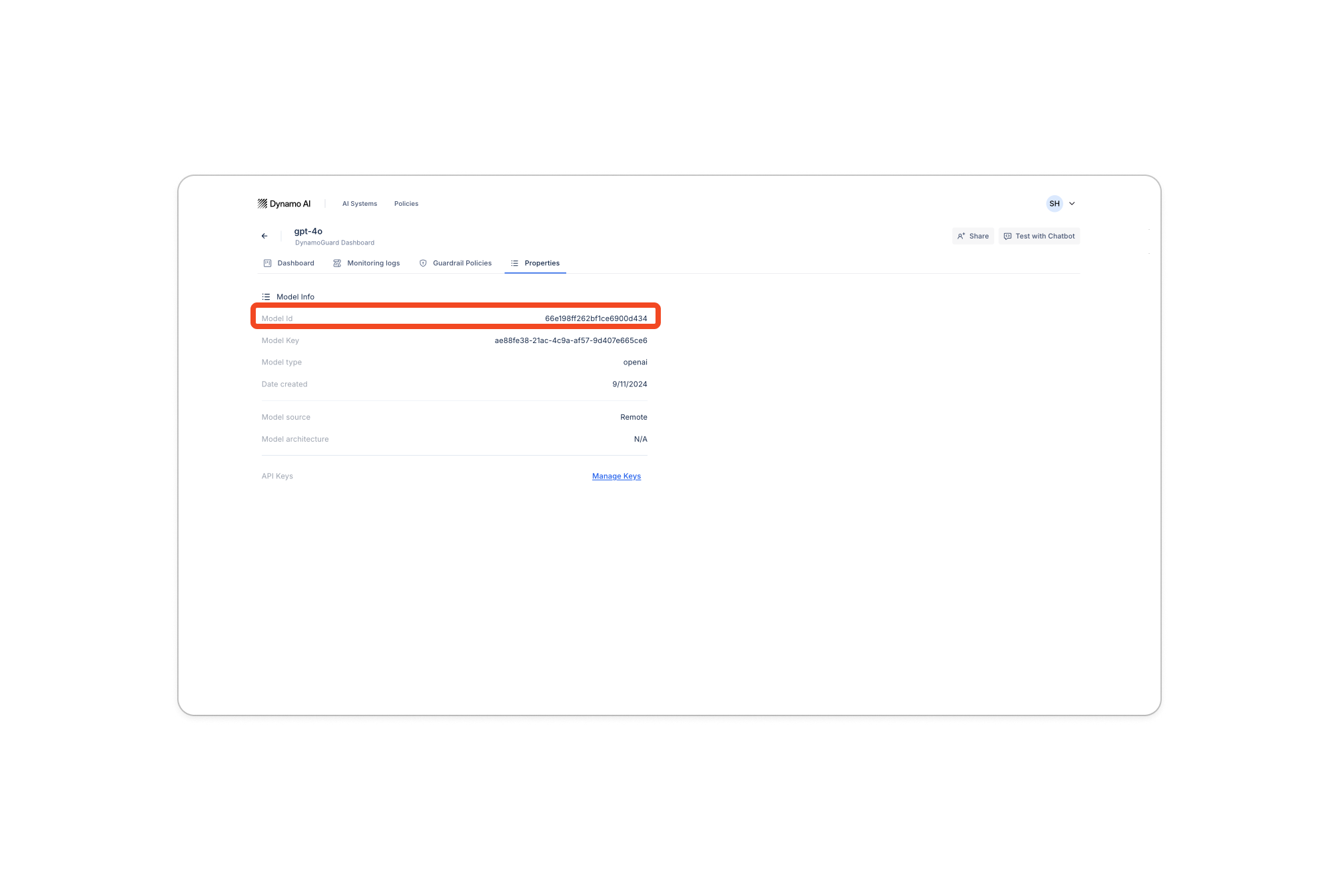

To find your model ID, click Manage Guardrails for the desired AI system and navigate to the Properties tab.